Hierarchical Encoding for mRNA Language Modeling (HELM): A Novel Pre-Training Strategy that Incorporates Codon-Level Hierarchical Structure into Language Model Training

Messenger RNA (mRNA) plays a crucial role in protein synthesis: it carries genetic information that is translated into proteins three nucleotides at a time, in units called codons. However, current language models for biological sequences, mRNA in particular, do not explicitly capture the hierarchical structure of codons. This limitation leads to suboptimal performance when predicting mRNA properties or generating diverse mRNA sequences. mRNA modeling is uniquely challenging because the mapping from codons to amino acids is many-to-one: multiple codons can encode the same amino acid yet differ in their biological properties. This hierarchy of synonymous codons matters for mRNA’s functional roles, particularly in therapeutics such as vaccines and gene therapies.

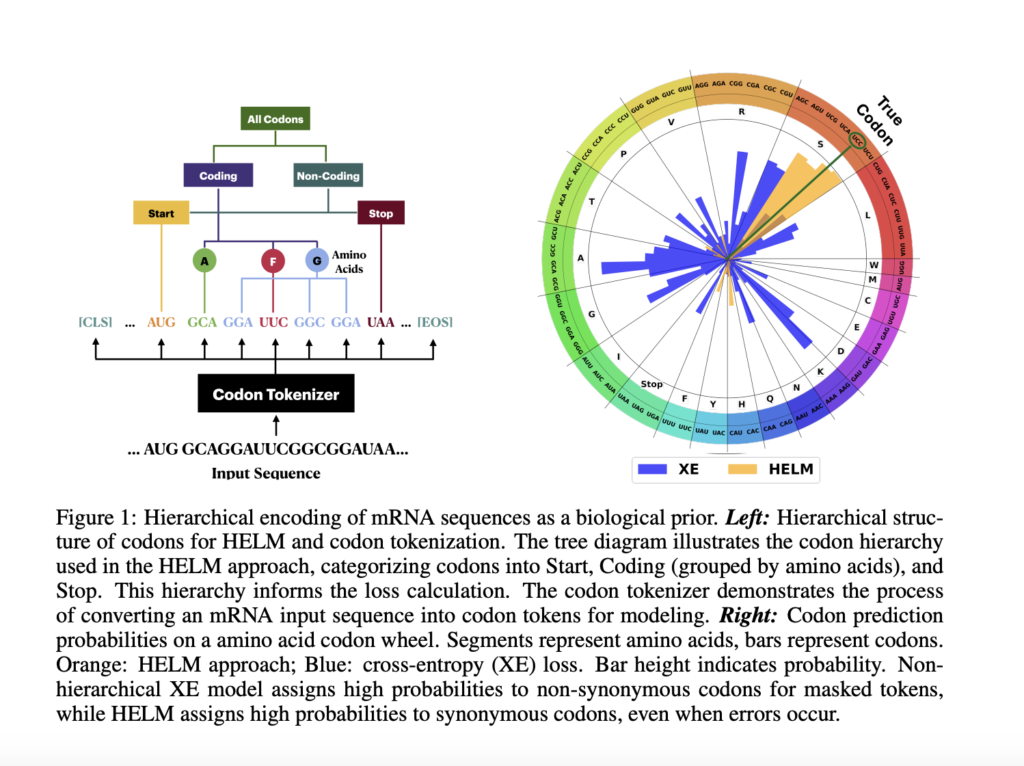

Researchers from Johnson & Johnson and the University of Central Florida propose a new approach to mRNA language modeling called Hierarchical Encoding for mRNA Language Modeling (HELM). HELM incorporates the hierarchical relationships among codons into language model training by modulating the loss function according to codon synonymity, which aligns training with the biology of mRNA sequences. Specifically, the size of the penalty depends on the kind of error: confusing a codon with a synonymous one (same amino acid) is treated as less significant than confusing it with a codon that encodes a different amino acid. The researchers evaluate HELM against existing mRNA models on tasks including mRNA property prediction and antibody region annotation, and report about 8% better average accuracy than existing models.

The core of HELM is its hierarchical encoding approach, which integrates codon structure directly into the language model’s training. It uses a Hierarchical Cross-Entropy (HXE) loss, in which codons are treated according to their positions in a tree-like hierarchy that represents their biological relationships. The hierarchy starts at a root node representing all codons and branches into coding and non-coding codons, with further categorization by biological function, such as “start” and “stop” signals or specific amino acids. During pre-training, HELM uses both Masked Language Modeling (MLM) and Causal Language Modeling (CLM), weighting each prediction error according to where it falls in this hierarchy. As a result, synonymous codon substitutions are penalized less than substitutions that change the amino acid, which encourages the model to learn codon-level relationships. HELM also remains compatible with common language model architectures and can be applied without major changes to existing training pipelines.
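To make the idea of a hierarchy-aware loss concrete, here is a minimal sketch in plain Python. It is not the authors’ implementation. It assumes a toy tree over five codons (root, then coding or non-coding, then amino acid or stop, then codon) and an exponential weighting `exp(-alpha * depth)` for the term at each tree level; the weighting HELM actually uses may differ. The names `CODON_PATHS`, `hxe_loss`, and `confident_logits` are hypothetical.

```python
import math

# Toy hierarchy, for illustration only: a small subset of codons, each mapped to
# its ancestors from just below the root down to its parent.
CODON_PATHS = {
    "GCU": ("coding", "Ala"),
    "GCC": ("coding", "Ala"),  # synonymous with GCU
    "GAU": ("coding", "Asp"),
    "UAA": ("non-coding", "stop"),
    "UAG": ("non-coding", "stop"),
}


def full_path(codon):
    """Path from just below the root down to the codon itself."""
    return CODON_PATHS[codon] + (codon,)


def softmax(logits):
    """Turn a dict of codon logits into a dict of probabilities."""
    peak = max(logits.values())
    exps = {c: math.exp(v - peak) for c, v in logits.items()}
    total = sum(exps.values())
    return {c: e / total for c, e in exps.items()}


def subtree_prob(probs, prefix):
    """Probability mass of all codons lying under the node named by `prefix`."""
    k = len(prefix)
    return sum(p for c, p in probs.items() if full_path(c)[:k] == prefix)


def hxe_loss(logits, target, alpha=0.5):
    """Hierarchical cross-entropy for one position (sketch).

    Sums -log p(node | parent) along the path root -> target, weighting the
    term for a node at depth d by exp(-alpha * d). The weighting is an
    assumption made for this example.
    """
    probs = softmax(logits)
    path = full_path(target)
    loss = 0.0
    for depth in range(1, len(path) + 1):
        node = subtree_prob(probs, path[:depth])
        parent = subtree_prob(probs, path[:depth - 1])  # empty prefix is the root
        loss -= math.exp(-alpha * depth) * math.log(node / parent)
    return loss


def confident_logits(codon, strength=4.0):
    """Logits for a model that strongly predicts `codon` (hypothetical helper)."""
    return {c: (strength if c == codon else 0.0) for c in CODON_PATHS}


# True codon is GCU; compare three kinds of wrong prediction.
loss_synonymous = hxe_loss(confident_logits("GCC"), "GCU")  # same amino acid
loss_other_aa = hxe_loss(confident_logits("GAU"), "GCU")    # different amino acid
loss_stop = hxe_loss(confident_logits("UAA"), "GCU")        # different top-level branch
print(loss_synonymous < loss_other_aa < loss_stop)
```

With `alpha=0.0` the conditional terms telescope and the loss reduces to ordinary cross-entropy, which assigns all three wrong predictions the same penalty. With `alpha > 0`, a mistake that stays within the same amino acid costs less than one that changes the amino acid, which is the behavior described above.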

HELM was evaluated on multiple datasets, including antibody-related mRNA and general mRNA sequences. Compared to non-hierarchical language models and state-of-the-art RNA foundation models, HELM demonstrated consistent improvements. On average, it outperformed standard pre-training methods by 8% on predictive tasks across six diverse datasets. For example, in antibody mRNA sequence annotation, HELM improved accuracy by around 5%, suggesting that it captures biologically relevant structure better than traditional models. HELM’s hierarchical approach also produced stronger clustering of synonymous sequences, further evidence that the model captures these biological relationships. Beyond classification, HELM was evaluated for its generative capabilities and produced diverse mRNA sequences that align more closely with the true data distribution than those from non-hierarchical baselines. The Fréchet Biological Distance (FBD) was used to measure how well the generated sequences matched real biological data, and HELM consistently achieved lower FBD scores, indicating closer alignment with real sequences.
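The article does not spell out how FBD is computed. Assuming it follows the standard Fréchet distance between two Gaussians fitted to embeddings of real and generated sequences (the same construction behind the Fréchet Inception Distance for images), the score would take the form below. Here $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ are the mean and covariance of the real and generated embeddings; these symbols are introduced for illustration and do not come from the source.

$$
d^2 = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$

Under this reading, the distance is zero when the two distributions share the same mean and covariance, and it grows as either diverges, which is why lower scores indicate generated sequences closer to real ones.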

The researchers conclude that HELM represents a significant advancement in the modeling of mRNA sequences, particularly in its ability to capture the biological hierarchies inherent to mRNA. By embedding these relationships directly into the training process, HELM achieves superior results in both predictive and generative tasks, while requiring minimal modifications to standard model architectures. Future work might explore more advanced methods, such as training HELM in hyperbolic space to better capture the hierarchical relationships that Euclidean space cannot easily model. Overall, HELM paves the way for better analysis and application of mRNA, with promising implications for areas such as therapeutic development and synthetic biology.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.